Content Censorship via Cycle Consistency

Cycle Consistency

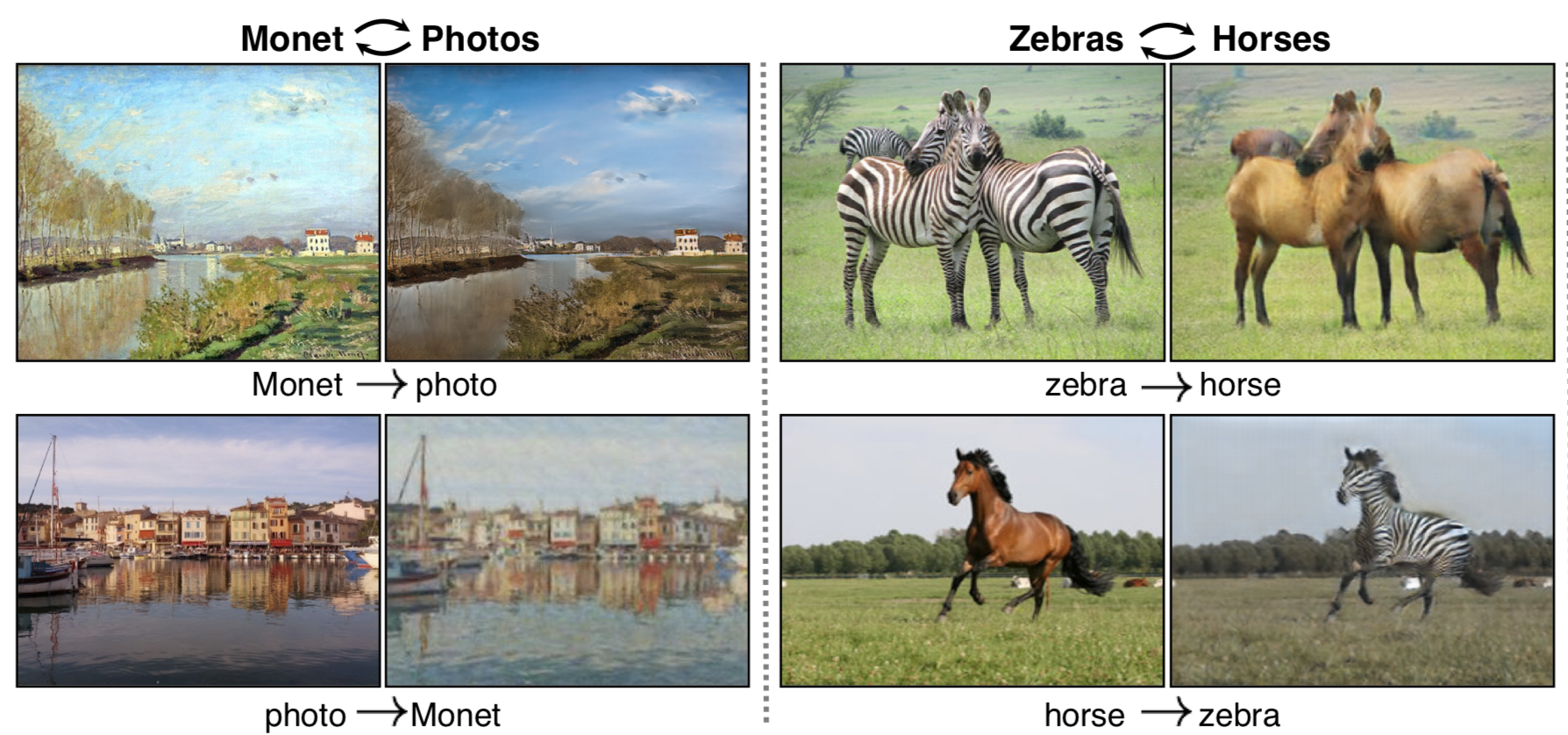

Zhu et al. [1] introduced the cycle consistency loss to encourage a pair of generative adversarial networks to learn mappings \(F: X \to Y\) and \(G: Y \to X\) such that \(G(F(x)) \approx x\) for \(x \in X\) and \(F(G(y)) \approx y\) for \(y \in Y\).

\begin{equation} \mathcal{L}_{cyc}(F, G) = \mathbb{E}_{x \sim p_{data}(x)} \Big[ \lVert G(F(x)) - x \rVert_1 \Big] + \mathbb{E}_{y \sim p_{data}(y)} \Big[ \lVert F(G(y)) - y \rVert_1 \Big] \end{equation}
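As a rough illustration, the loss can be estimated from samples by averaging the L1 reconstruction error in each direction. The sketch below is plain Python under simplifying assumptions: `F` and `G` are ordinary functions on lists of floats rather than neural networks, and the names `l1_distance`, `cycle_consistency_loss`, and `G_bad` are hypothetical and not taken from the paper.

```python
from statistics import fmean


def l1_distance(a, b):
    """L1 norm of the difference between two equal-length vectors."""
    return sum(abs(ai - bi) for ai, bi in zip(a, b))


def cycle_consistency_loss(F, G, xs, ys):
    """Sample estimate of L_cyc(F, G) from unpaired samples xs (domain X) and ys (domain Y)."""
    # Forward cycle: x -> F(x) -> G(F(x)) should land back on x.
    forward = fmean([l1_distance(G(F(x)), x) for x in xs])
    # Backward cycle: y -> G(y) -> F(G(y)) should land back on y.
    backward = fmean([l1_distance(F(G(y)), y) for y in ys])
    return forward + backward


# Toy mappings (assumptions for illustration): F doubles every coordinate.
def F(x):
    return [2.0 * v for v in x]


# G halves every coordinate, so it exactly inverts F.
def G(y):
    return [0.5 * v for v in y]


# G_bad adds an offset, so the cycles no longer close.
def G_bad(y):
    return [0.5 * v + 1.0 for v in y]


xs = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # samples from domain X
ys = [[10.0, 20.0], [30.0, 40.0]]          # samples from domain Y, not paired with xs

loss_good = cycle_consistency_loss(F, G, xs, ys)
loss_bad = cycle_consistency_loss(F, G_bad, xs, ys)
print(loss_good, loss_bad)
```

Note that `xs` and `ys` have different lengths and no correspondence between their elements; the loss only ever compares a sample with its own round trip.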

They imposed this loss on image GANs to perform unpaired image-to-image translation, with applications such as style transfer and turning horses into zebras.
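For reference, the full training objective in the CycleGAN paper combines two adversarial losses with the cycle consistency term. It is written here in this post's notation (\(F: X \to Y\), \(G: Y \to X\)), which swaps the letters used in the paper; \(D_X\) and \(D_Y\) are the discriminators for each domain, and \(\lambda\) is a weight on the cycle term.

\begin{equation} \mathcal{L}(F, G, D_X, D_Y) = \mathcal{L}_{GAN}(F, D_Y) + \mathcal{L}_{GAN}(G, D_X) + \lambda \, \mathcal{L}_{cyc}(F, G) \end{equation}

\begin{equation} \mathcal{L}_{GAN}(F, D_Y) = \mathbb{E}_{y \sim p_{data}(y)} \Big[ \log D_Y(y) \Big] + \mathbb{E}_{x \sim p_{data}(x)} \Big[ \log \big( 1 - D_Y(F(x)) \big) \Big] \end{equation}

The adversarial terms push translated samples to look like they belong to the target domain, while the cycle term keeps each translation tied to its input.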

In general, the technique applies to any pair of learned mappings between two domains; it is not limited to images or to GANs.

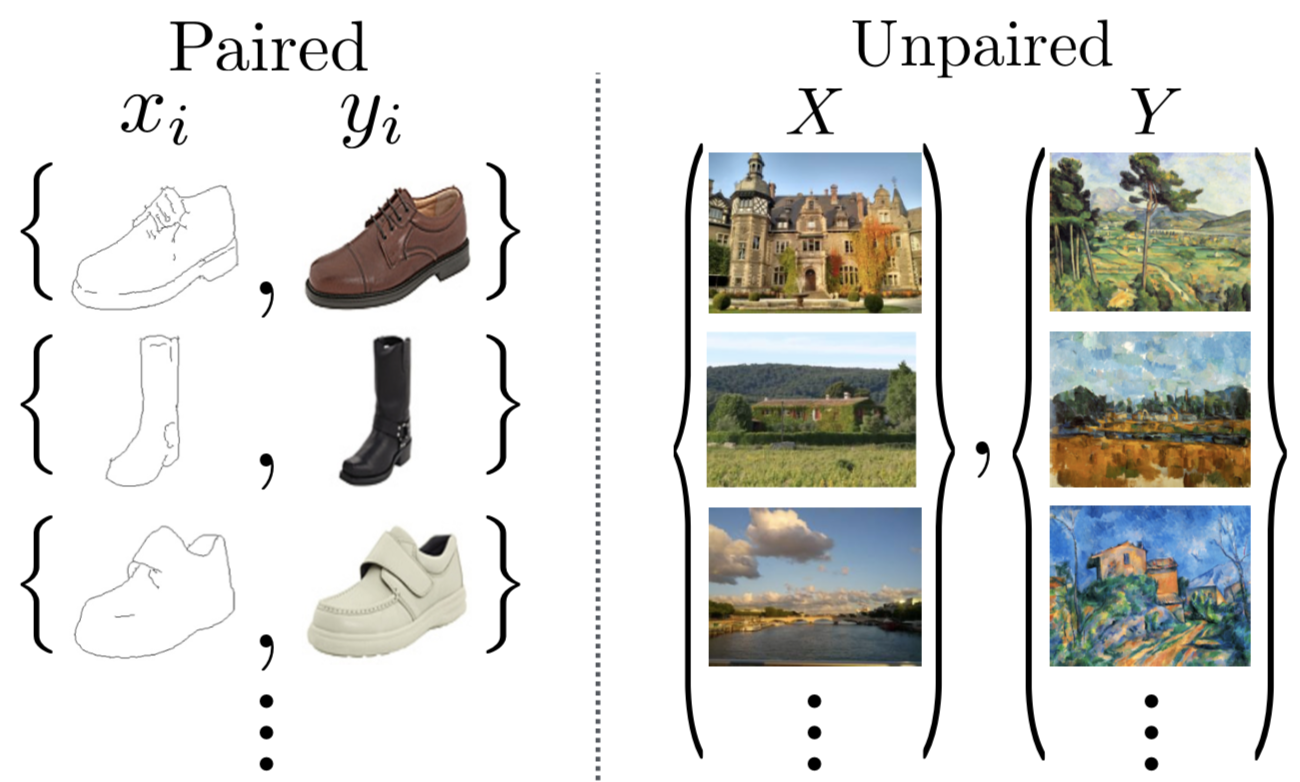

It requires a pair of domains, but not a dataset of paired data points. This is very useful, since paired data points are difficult to collect and curate, whereas two unpaired collections, one per domain, are comparatively easy to gather.

We look at two examples of how the cycle consistency technique can be used as an effective tool for content censorship.

Example One: Pornography

In "Seamless Nudity Censorship: an Image-to-Image Translation Approach based on Adversarial Training", Wehrmann et al. used CycleGANs to learn the mapping between a set of images of naked women and a set of images of women wearing bikinis.

Their method can then be used to automatically censor nudity without introducing artifacts such as black bounding boxes or blurs into the image.

Example Two: Offensive Language

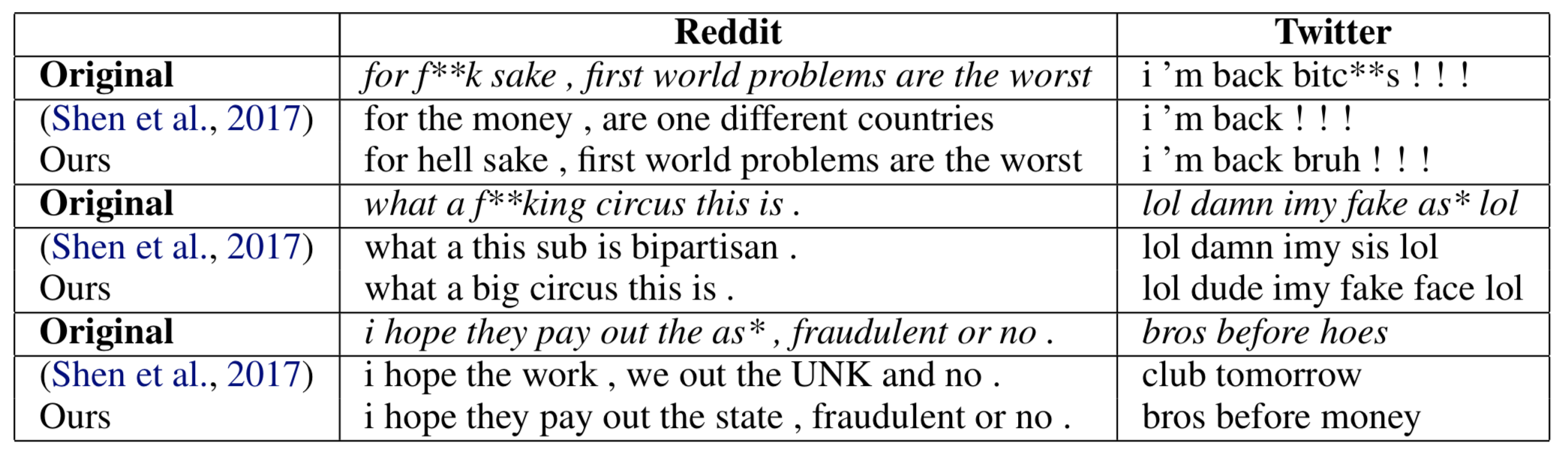

In "Fighting Offensive Language on Social Media with Unsupervised Text Style Transfer", Dos Santos et al. used a cycle encoder-decoder architecture to learn the mapping between a set of offensive sentences and a set of non-offensive sentences.

Their method can then be used to automatically rewrite social media sentences that contain offensive language into sentences that do not.

These two examples suggest that cycle consistency is a general technique for censoring content in lieu of removing it. Specifically, if we have a classifier that separates bad content from good content, then instead of using it for content removal, we can repurpose it to collect the two distinct domains of data needed for content censorship via cycle consistency.
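The data collection step can be sketched as follows. This is a minimal illustration, assuming a scoring function that returns a value in [0, 1]; `split_domains`, `toy_offensiveness_score`, and `BLOCKLIST` are hypothetical names, and the keyword check is only a stand-in for a real trained classifier.

```python
def split_domains(items, score_bad, threshold=0.5):
    """Partition items into (bad, good) using a classifier score in [0, 1]."""
    bad, good = [], []
    for item in items:
        if score_bad(item) >= threshold:
            bad.append(item)
        else:
            good.append(item)
    return bad, good


# Stand-in classifier (assumption): flags a sentence if it contains a blocklisted word.
BLOCKLIST = {"idiot", "stupid"}


def toy_offensiveness_score(sentence):
    words = [w.strip(".,!?") for w in sentence.lower().split()]
    return 1.0 if any(w in BLOCKLIST for w in words) else 0.0


corpus = [
    "You are an idiot!",
    "Thanks for the helpful answer.",
    "What a stupid idea.",
    "See you tomorrow.",
]

offensive, clean = split_domains(corpus, toy_offensiveness_score)
print(offensive)
print(clean)
```

The two resulting lists are unpaired: no clean sentence is a rewrite of any particular offensive one, which is exactly the setting cycle consistency is designed for.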

(Obviously, this technique can also be used by malicious actors to transform good content into bad content. The paper in the first example includes a figure demonstrating the reverse mapping, from bikini images to nude ones.)

-

[1] Jun-Yan Zhu, Taesung Park, Phillip Isola, Alexei A. Efros. Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks. ICCV 2017.